Abstract

In this paper, we address the problem of reducing the computational burden of Model Predictive Control (MPC) for real-time robotic applications. We propose TransformerMPC, a method that enhances the computational efficiency of MPC algorithms by leveraging the attention mechanism in transformers for both online constraint removal and better warm start initialization. Specifically, TransformerMPC accelerates the computation of optimal control inputs by selecting only the active constraints to be included in the MPC problem, while simultaneously providing a warm start to the optimization process. This approach ensures that the original constraints are satisfied at optimality. TransformerMPC is designed to be seamlessly integrated with any MPC solver, irrespective of its implementation. To guarantee constraint satisfaction after removing inactive constraints, we perform an offline verification to ensure that the optimal control inputs generated by the MPC solver meet all constraints. The effectiveness of TransformerMPC is demonstrated through extensive numerical simulations on complex robotic systems, achieving up to 35x improvement in runtime without any loss in performance.

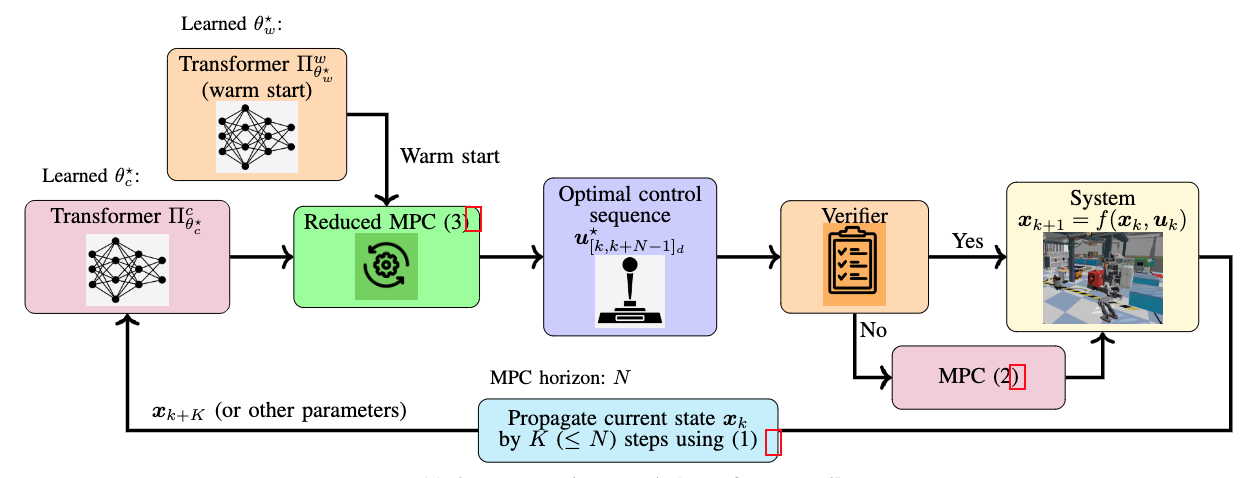

Proposed Approach

Our approach improves the computational efficiency of the MPC framework by using a transformer-based attention mechanism both to identify the active constraints and to provide a better warm start initialization. It can be integrated with any state-of-the-art MPC solver. A verifier step then checks whether the optimal control sequence obtained from the simplified MPC problem, which has fewer constraints, satisfies all the constraints of the original MPC problem. TransformerMPC is also applicable to general nonlinear MPC problems.
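To make the constraint-removal idea concrete, the following is a sketch under the assumption that the MPC problem is written as a parametric quadratic program. The symbols $P$, $q$, $G$, $h$, $\theta$, and $\hat{\mathcal{A}}$ are notation introduced here for illustration and are not taken from the paper.

```latex
% Full problem for parameters \theta (initial condition, reference, ...):
u^\star(\theta) = \arg\min_{u} \; \tfrac{1}{2} u^\top P u + q(\theta)^\top u
\quad \text{s.t.} \quad G u \le h(\theta)

% Active set at the optimum:
\mathcal{A}(\theta) = \{\, i : G_i \, u^\star(\theta) = h_i(\theta) \,\}

% Reduced problem, keeping only the predicted active rows \hat{\mathcal{A}}:
\hat{u}(\theta) = \arg\min_{u} \; \tfrac{1}{2} u^\top P u + q(\theta)^\top u
\quad \text{s.t.} \quad G_i u \le h_i(\theta), \; i \in \hat{\mathcal{A}}

% Verifier: accept \hat{u} only if it is feasible for the full problem:
G \, \hat{u}(\theta) \le h(\theta)
```

Because the reduced problem is a relaxation of the full one, a reduced solution that passes the verifier is also optimal for the full problem, which is why removing constraints need not cost any performance.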

Learning Phase: In the learning phase, the transformer is trained on a dataset of MPC problem instances, where each instance is characterized by parameters such as initial conditions and reference trajectories. The transformer learns to map these parameters to the corresponding active constraints. A second transformer model provides the warm start initialization by predicting a control sequence close to the optimal control sequence.
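The training labels for the first model are the active constraints of each solved instance. A minimal sketch of how such labels could be extracted is shown below, assuming linear inequality constraints of the form `G u <= h`; the function name, the tolerance, and the toy data are hypothetical and not taken from the paper.

```python
import numpy as np

def active_constraint_labels(G, h, u_opt, tol=1e-6):
    """Return a boolean vector: True where row i of G u <= h is active at u_opt."""
    slack = h - G @ u_opt          # nonnegative for a feasible u_opt
    return np.abs(slack) <= tol

# Toy instance: box bounds -1 <= u_k <= 1 on a 2-step control sequence.
G = np.vstack([np.eye(2), -np.eye(2)])
h = np.ones(4)
u_opt = np.array([1.0, 0.3])       # hypothetical optimal control sequence
labels = active_constraint_labels(G, h, u_opt)
print(labels)
```

Here only the upper bound on the first control input is tight, so it is the only constraint labeled active.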

Execution Phase: In the execution phase, the trained models predict, in real time, the active constraints for a given set of parameters and supply the warm start initialization. The MPC solver can then drop the constraints predicted to be inactive and solve a problem containing only the predicted active constraints, which reduces the computational burden while still meeting the control objectives.
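The execution phase, combined with the verifier step described earlier, can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: `solve_qp` is a hypothetical solver wrapper, `toy_box_qp` is a stand-in solver that only handles diagonal costs with box bounds, and re-solving the full problem when verification fails is an assumed fallback strategy.

```python
import numpy as np

def solve_with_constraint_removal(solve_qp, P, q, G, h, predicted_active,
                                  warm_start=None, tol=1e-8):
    """Solve the reduced QP, verify against all constraints, fall back if needed.

    solve_qp(P, q, G, h, initvals) is a hypothetical solver wrapper that
    returns the minimizer of 0.5 u^T P u + q^T u subject to G u <= h.
    """
    mask = np.asarray(predicted_active, dtype=bool)
    u = solve_qp(P, q, G[mask], h[mask], warm_start)
    # Verifier: check the candidate against every original constraint.
    if np.all(G @ u <= h + tol):
        return u, True
    # Assumed fallback: re-solve the full problem, warm-started from u.
    return solve_qp(P, q, G, h, u), False


def toy_box_qp(P, q, G, h, initvals=None):
    """Stand-in solver for diagonal P and constraints of the form +/- u_k <= h_i."""
    u = -q / np.diag(P)                       # unconstrained minimizer
    for row, bound in zip(G, h):
        k = int(np.argmax(np.abs(row)))       # variable bounded by this row
        if row[k] > 0:
            u[k] = min(u[k], bound / row[k])  # upper bound
        else:
            u[k] = max(u[k], bound / row[k])  # lower bound
    return u


P = np.eye(2)
q = np.array([-2.0, -0.3])
G = np.vstack([np.eye(2), -np.eye(2)])        # -1 <= u_k <= 1
h = np.ones(4)

# Correct prediction: only the first upper bound is kept.
u_ok, verified_ok = solve_with_constraint_removal(
    toy_box_qp, P, q, G, h, [True, False, False, False])

# Wrong prediction: every constraint is dropped, so the verifier rejects it.
u_fb, verified_fb = solve_with_constraint_removal(
    toy_box_qp, P, q, G, h, [False, False, False, False])
```

In both calls the returned control sequence satisfies the original constraints; the second call simply pays for one extra full solve.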

Environments

For our experiments, we consider three realistic MPC problems that are common in the robotics community. The first is that of balancing an Upkie wheeled biped robot. Second, we consider the problem of stabilizing a Crazyflie quadrotor from a random initial condition to a hovering position. Finally, we consider the problem of stabilizing an Atlas humanoid robot balancing on one foot. All benchmarking experiments were performed on a desktop equipped with an Intel(R) Core(TM) i9-10900K CPU @ 3.70GHz and an NVIDIA RTX A4000 GPU with 16 GB of GDDR6 memory.

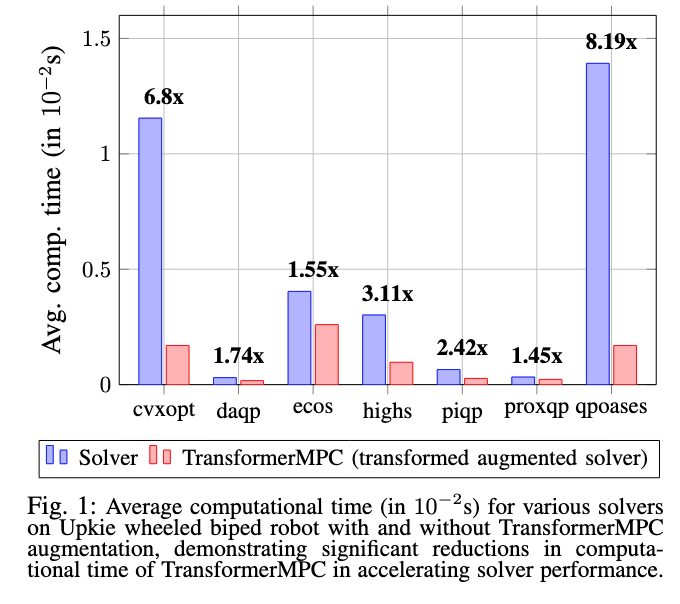

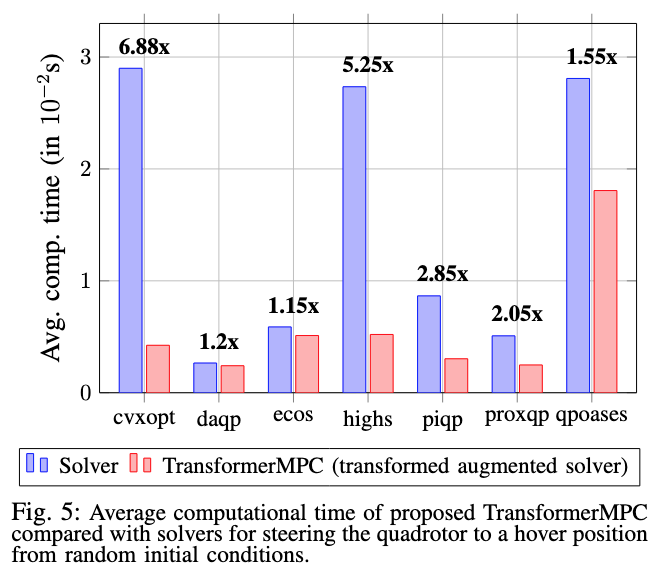

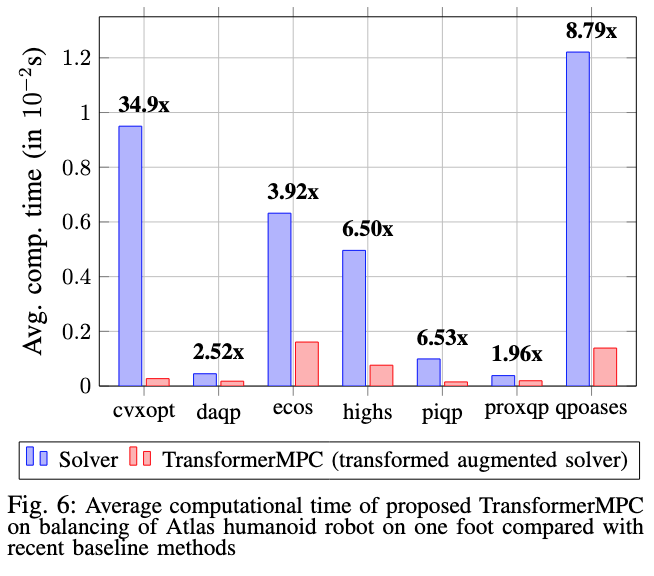

Computational efficiency

All runtimes in this section are reported in units of 1e-2 s.

For the wheeled biped robot, TransformerMPC significantly reduces computational time across all solvers. Notably, CVXOPT drops from 1.1549 to 0.1698 (6.8x improvement) and qpOASES from 1.3923 to 0.1698 (8.19x improvement). Even solvers with initially lower computational times, such as DAQP and ProxQP, benefit from TransformerMPC, with reductions to 0.0174 and 0.0227, respectively.

For the quadrotor, TransformerMPC demonstrates consistent performance improvements across all solvers. CVXOPT's runtime decreases from 2.8996 to 0.4241 (6.88x improvement), while qpOASES shows a reduction from 2.80854 to 1.80683 (1.55x improvement). HiGHS also improves substantially, with its runtime dropping from 2.7345 to 0.5208.

Finally, for Atlas balancing on one foot, CVXOPT's runtime falls from 0.95 to 0.0272 (34.9x improvement), while qpOASES sees an 8.79x reduction from 1.221 to 0.1389. Other solvers such as PIQP and HiGHS also demonstrate substantial improvements of 6.53x and 6.50x, respectively. Even for lower-time solvers such as DAQP and ProxQP, TransformerMPC achieves notable gains, reducing the time by 2.52x and 1.96x, respectively.
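Each improvement factor is the baseline runtime divided by the runtime with TransformerMPC. The short script below recomputes the factors from the runtime pairs quoted in the text. Small differences from the quoted factors may come from rounding in the reported runtimes.

```python
# Runtimes in units of 1e-2 s, copied from the text: (baseline, TransformerMPC).
runtimes = {
    ("wheeled biped", "CVXOPT"): (1.1549, 0.1698),
    ("wheeled biped", "qpOASES"): (1.3923, 0.1698),
    ("quadrotor", "CVXOPT"): (2.8996, 0.4241),
    ("quadrotor", "qpOASES"): (2.80854, 1.80683),
    ("quadrotor", "HiGHS"): (2.7345, 0.5208),
    ("Atlas", "CVXOPT"): (0.95, 0.0272),
    ("Atlas", "qpOASES"): (1.221, 0.1389),
}

# Speedup = baseline runtime / accelerated runtime.
speedups = {key: base / fast for key, (base, fast) in runtimes.items()}

for (robot, solver), s in speedups.items():
    print(f"{robot:14s} {solver:8s} {s:6.2f}x")
```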

Prediction accuracy

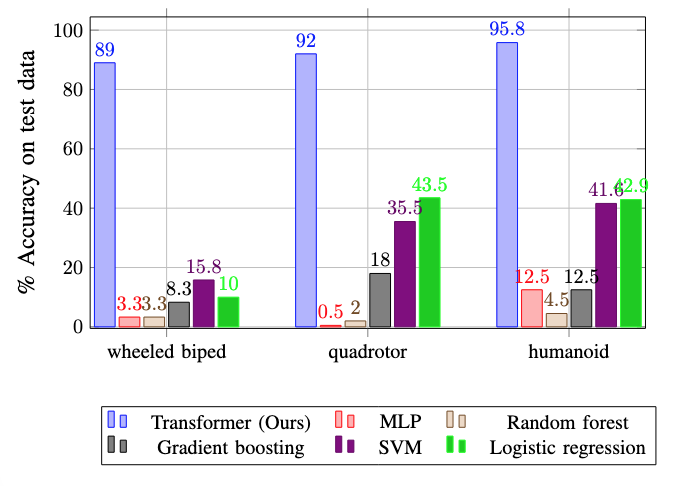

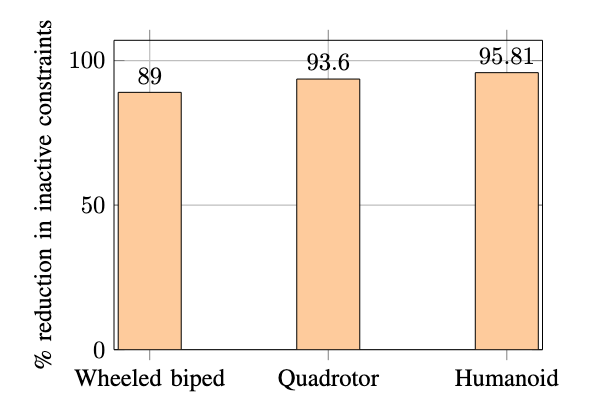

For these numerical experiments, we employed an 80-20 train-test split of the dataset, using 80% of the data for training the models and 20% for testing. Figure 1 presents the prediction accuracy on test data for different learning models in predicting inactive constraints across the three robotic systems. The learned transformer model consistently achieves the highest accuracy, with 89% for the wheeled biped robot, 92% for the quadrotor, and 95.8% for the humanoid. In contrast, the other learned models (MLP, Random Forest, Gradient Boosting, Support Vector Machine (SVM), and Logistic Regression) show significantly lower accuracy across all systems. These results highlight the efficacy of the learned transformer model in accurately predicting inactive constraints.

TransformerMPC also removes a large share of the inactive constraints across all systems, with an 89.0% reduction for the wheeled biped, 93.6% for the quadrotor, and 95.8% for the humanoid. These results demonstrate the effectiveness of TransformerMPC in simplifying the MPC problem by removing inactive constraints, particularly for high-dimensional and complex systems such as the wheeled biped and the Atlas humanoid robot, thereby enhancing computational efficiency.
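The evaluation protocol can be illustrated with a small sketch. The exact metric definitions are assumptions made here: accuracy is taken as element-wise agreement between predicted and true inactive flags, and constraint reduction as the fraction of constraints flagged for removal. All function names and the toy data are hypothetical.

```python
import numpy as np

def train_test_split_indices(n, train_frac=0.8, seed=0):
    """Shuffle n sample indices and split them into train and test sets."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n)
    n_train = int(round(train_frac * n))
    return perm[:n_train], perm[n_train:]

def inactive_prediction_accuracy(pred_inactive, true_inactive):
    """Fraction of constraints whose inactive/active flag is predicted correctly."""
    pred = np.asarray(pred_inactive, dtype=bool)
    true = np.asarray(true_inactive, dtype=bool)
    return float(np.mean(pred == true))

def constraint_reduction(pred_inactive):
    """Fraction of constraints that would be removed from the MPC problem."""
    return float(np.mean(np.asarray(pred_inactive, dtype=bool)))

train_idx, test_idx = train_test_split_indices(10)

# Two toy test instances with four constraints each (1 = inactive).
true_inactive = [[1, 1, 0, 1], [1, 0, 1, 1]]
pred_inactive = [[1, 1, 0, 1], [1, 1, 1, 1]]

accuracy = inactive_prediction_accuracy(pred_inactive, true_inactive)
reduction = constraint_reduction(pred_inactive)
```

In the toy data one active constraint is wrongly predicted inactive, which is the kind of error the verifier step is meant to catch.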

BibTeX

@article{zinage2024transformermpc,
  title={TransformerMPC: Accelerating Model Predictive Control via Transformers},
  author={Zinage, Vrushabh and Khalil, Ahmed and Bakolas, Efstathios},
  journal={arXiv preprint arXiv:2409.09266},
  year={2024}
}